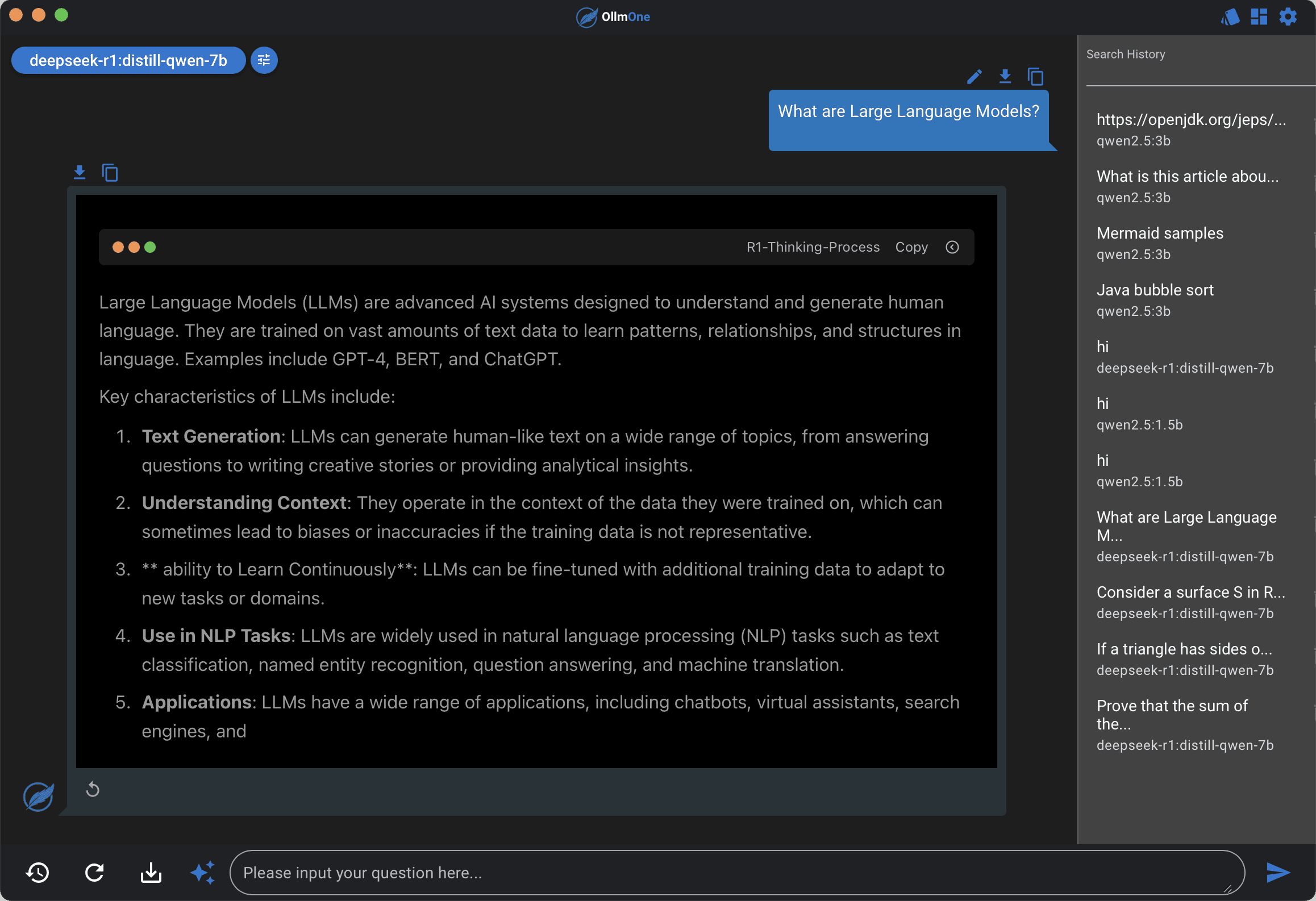

Run LLMs on your PC with ease

Supports popular LLMs such as DeepSeek, Qwen, Llama, Gemma, and Phi. No configuration is required, and models run directly on your computer.

Integrate advanced inference capabilities of open-source LLMs

Core Features for Efficient Work

Fast and Convenient AI Features to Meet Diverse Needs

Supports DeepSeek R1, a state-of-the-art reasoning model designed to work through problems step by step.

Practical Tools for Better Experience

Streamlined Workflows to Make Work Easier

Chat History

Supports Chat History

Stores your conversations locally, so you can reopen or delete previous chats whenever you wish.

Markdown Rendering

Supports Markdown Rendering

Renders Markdown in chats, including Mermaid diagrams, KaTeX formulas, and code highlighting.

Export Chat

Supports Offline Chat Sharing

Export your chat history in Markdown or HTML format to share it offline.

Model Customization

Quantization Level

Supports custom quantization levels so you can match a model to your hardware: lower-precision variants need less memory and usually run faster, at some cost in output quality.

Adding New Model

Add models by URL, by file upload, or by searching model hubs such as Hugging Face directly.

Prompt Customization

Setting Up Frequently Used Prompts

Save frequently used prompts, both system prompts and user prompts, for quick access.

Get Started Today

Boost Your Work Efficiency and Unlock AI Potential. Choose our software to make your work smarter and more productive.

Frequently Asked Questions

If you can’t find what you’re looking for, contact our support team, and we’ll get back to you as soon as possible.

On which operating systems can it be installed?

Currently, we offer versions for Windows and macOS. The Windows version can be downloaded from our official website, while the macOS version is available on the Apple App Store.

Does it support DeepSeek R1 deep reasoning?

Yes, it does.

Why are some models slow to respond?

Large model inference is highly resource-intensive and relies on GPU acceleration for fast responses. Performance is typically better on Windows PCs with NVIDIA GPUs and on Macs with Apple Silicon chips (via Metal), and slower on CPU-only devices. In addition, models with more parameters (e.g., 7B, 8B) respond more slowly, while smaller models (e.g., 1.5B, 2B, 3B) answer faster.
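As a rough guide to why model size and quantization level matter, the memory needed just to hold a model's weights is approximately the parameter count multiplied by the bits per weight. The sketch below illustrates that arithmetic only. The function name `estimate_weight_memory_gib` and the bit widths shown are illustrative assumptions, not settings or APIs of this software, and the estimate ignores the context cache and other runtime overhead, so real usage will be higher.

```python
def estimate_weight_memory_gib(params_billions: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in GiB.

    Hypothetical helper for illustration: ignores the KV cache,
    activations, and any runtime overhead.
    """
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / (1024 ** 3)


if __name__ == "__main__":
    # Model sizes taken from the FAQ answer; bit widths are illustrative.
    for size in (1.5, 3, 7, 8):
        for bits in (4, 8, 16):
            gib = estimate_weight_memory_gib(size, bits)
            print(f"{size}B model at {bits}-bit: about {gib:.1f} GiB for weights")
```

By this estimate, a 7B model needs roughly 3.3 GiB for weights at 4 bits per weight and about 13 GiB at 16 bits, which is why a smaller model or a lower quantization level can make the difference on modest hardware.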

Is there a trial version, and how long is the trial period?

The Windows version offers a 30-day free trial. To start it, open the software and click the trial button. The macOS version does not currently offer a trial.